The Great Outdoors Dataset: Off-Road Multi-Modal Dataset

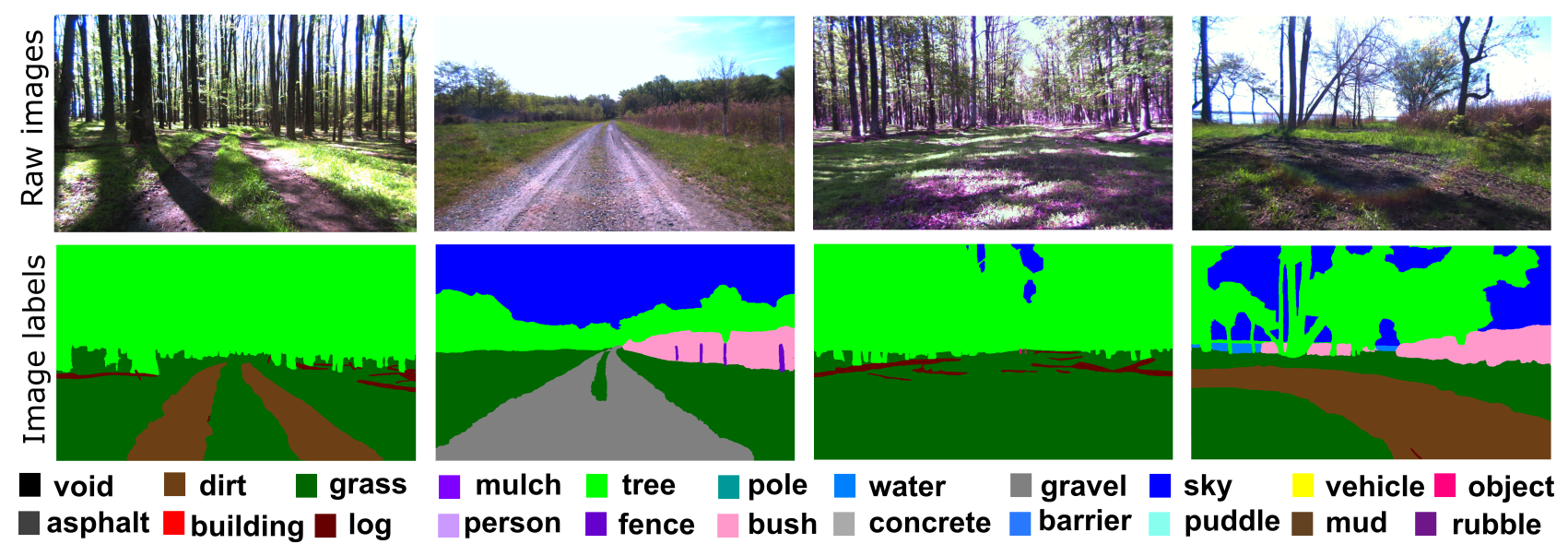

The Great Outdoors Dataset: Off-Road Multi-Modal Dataset is a resource for advancing autonomous navigation research in challenging off-road environments. It was collected using an unmanned ground vehicle (UGV) designed for unstructured terrain and combines data from several sensors to support robust and safe navigation.

The sensor setup includes a 64-channel LiDAR for detailed 3D point cloud generation, multiple RGB cameras for high-resolution visual capture, and a thermal camera for infrared imaging in low-visibility or night-time conditions. In addition, the dataset features data from an inertial navigation system (INS) that provides accurate motion and orientation measurements, a 2D mmWave radar for enhanced perception in adverse weather conditions, and an RTK GPS system for precise geolocation.

The Great Outdoors Dataset places a strong emphasis on semantic scene understanding, addressing a gap in off-road autonomy research by offering multimodal data with annotated labels for 3D semantic segmentation. Unlike many existing datasets that focus on urban environments, it is tailored specifically to off-road applications, making it a resource for developing machine learning models and sensor fusion techniques. It builds on the foundation of RELLIS-3D and is intended to support algorithms that can perceive and navigate the complex dynamics of off-road settings.

Collaborators:

- Texas A&M University: Peng Jiang, Kasi Viswanath, Akhil Nagariya, George Chustz, Srikanth Saripalli

- CCDC Army Research Laboratory: Maggie Wigness, Philip Osteen, Tim Overbye, Christian Ellis, Long Quang

License

All datasets and code on this page are copyrighted by us and published under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License.